Introduction

A while ago, I created and shared an out-of-the-box project that was cost-effective to run, free to download and use, and easy to maintain. You can read the original blog post here.

A lot has happened since then. Several bugs have been fixed, and new features and customization options have been added. Although I still have a backlog of features I would like to implement, I think it is time to write an update covering the project as it stands today with the new features and options.

The core concept remains the same: to provide a simple solution for notifying the owner and organization about service principals with expired or soon-to-expire secrets or certificates.

The project is designed so that you can download it from GitHub, adjust the required variables to suit your needs, and deploy it with Terraform. If you are new to Terraform, you can check out my guide to getting started here. All you need is a basic understanding of Terraform to deploy it in your environment.

There are a lot of configuration combinations available. Therefore, I will not cover all of them in this post, but instead create a series of shorter blog posts explaining the different scenarios.

Prerequisites

The prerequisites to enable this solution are fairly limited, as you primarily need permissions to deploy the solution and create a service principal for handling lookups in Entra ID.

The requirements for implementing this solution are listed below.

User running Terraform – these permissions are only required during deployment or updates:

- Create new service principal.

- Create new resource group.

- Set permission on resource group and resources inside the resource group.

- Add secret to key vault (e.g. key vault secrets officer).

Service principal created using Terraform:

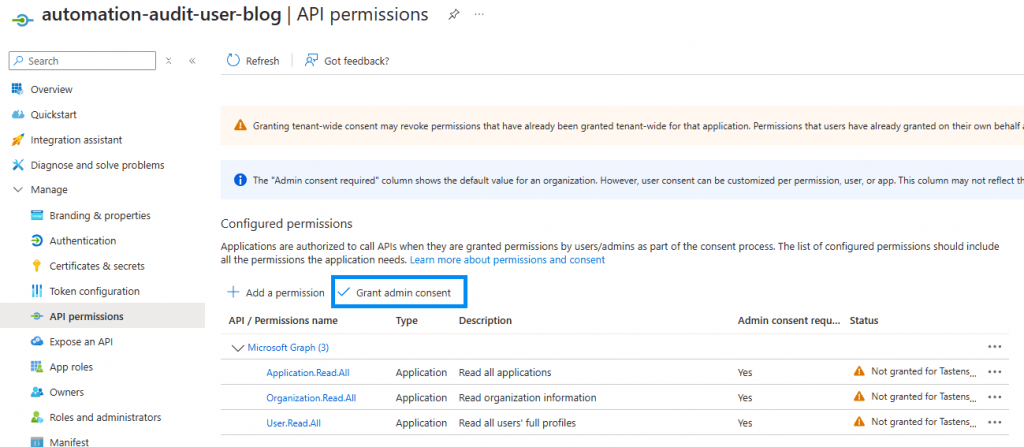

- API permissions (someone needs to approve these AFTER Terraform has run).

  - Microsoft Graph

    - Application.Read.All (Microsoft docs)

      - This is used to get the required information about when a secret or certificate is expiring and who the owner of the service principal is.

    - Organization.Read.All (Microsoft docs)

    - User.Read.All (Microsoft docs)

      - This is required to find information about the service principal owner.

Terraform

The client executing the code must have Terraform installed. Terraform is free to use and can be installed using the Install Terraform guide on the HashiCorp homepage.

You can read my guide on getting started here.

Azure CLI

To use Terraform with Azure, you need the Azure CLI. Please refer to Microsoft Learn for instructions on installation.

Files

After the Terraform code is deployed, the permissions required by the deploying user are no longer needed and can safely be removed.

Project

Each step in the process will be described so that you understand exactly what you are deploying.

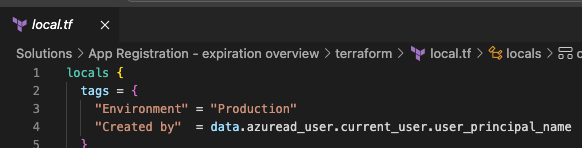

As mentioned earlier, we use Terraform to deploy the needed infrastructure. All variables are defined in the “variable.tf” file except for tags which are maintained in the local file. By default, it will deploy all resources with the following two tags:

- Environment = Production

- Created by = User running the deployment

You can add or remove tags, but for now you have to change them in the local.tf file.

Confidential information will be securely stored in a key vault while all other variables will be saved in the variable section of the automation account. Terraform will save the variables in the respective locations.

The concept drawing from version 1 still applies, since the solution requires the same resources. If you are currently running version 1, you can simply download the newest version, configure the new variable.tf file, and run Terraform apply. All resources can be upgraded in place.

Explaining the design

Let’s have a look at what happens. I will go through it in detail later in this post.

Step 1 – Triggering the automation account

The automation account will be triggered by the method you choose – either by a timer or by a manual trigger. Once triggered, it will execute a PowerShell script that uses the managed identity to gain access to both the key vault and the storage account. The script will retrieve the secret for the service principal with Entra ID access from the key vault.

Step 2 – Collecting and sorting data

Once the secret is obtained, the script will connect to the MS Graph module using the service principal. It will gather a list of all service principals, their owners, and whether there are any expiring secrets or certificates associated with them.

Based on the Boolean settings defined in the variable section, the script will perform one or more of the following actions in the below order:

- Expired secrets and certificates: The script will identify all service principals with already expired secrets or certificates. This list will be sent to the stated contact email.

- About-to-expire secrets and certificates: The script will compile a list of all service principals with secrets or certificates that are about to expire. This list will also be sent to the stated contact email.

- Orphaned service principals: The script will identify all service principals without defined owners. This list will include a column indicating whether the specific service principal contains any secrets or certificates. The list will be sent to the stated contact email.

- Notify Owners: The script will iterate through all service principals. If it finds any service principals with expiring secrets or certificates and an assigned owner, it will store the owner’s information and the relevant secret details in an array. After processing all service principals, it will send an email to each owner’s stated email address. The timing of these emails is controlled by the email_inform_owners_days_with_warnings variable, which specifies the number of days before expiry when notifications are sent.

  - Owners whose secrets have not yet expired are notified first. Owners whose secrets have already expired, and who should therefore already be aware of the issue, are notified last.

Why this particular order? For deployments where a Microsoft-supplied domain is used, there are some fairly strict limits on the number of e-mails that can be sent. The above order was selected to ensure that the most critical e-mails are sent first in these cases.
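To make the fixed order concrete, here is a minimal sketch in Python. It is not the project's code (the runbook itself is PowerShell); it only illustrates the idea of running the enabled actions in a set priority order. The flag names are the Boolean variables described later in this post, while the short action labels and the `planned_actions` helper are made up for the example.

```python
# Illustrative sketch only; the real project does this in a PowerShell runbook.
# Flag names come from the project's variables; the labels are hypothetical.
ACTION_ORDER = [
    ("email_Contact_email_for_all_SPs_with_expired_secrets_status", "expired"),
    ("email_Contact_email_for_all_SPs_where_secret_is_about_to_expire", "about_to_expire"),
    ("email_Contact_email_get_list_of_orphaned_Service_Principals", "orphaned"),
    ("email_inform_owners_directly", "notify_owners"),
]


def planned_actions(settings):
    """Return the enabled actions, always in the fixed priority order."""
    return [label for flag, label in ACTION_ORDER if settings.get(flag, False)]


settings = {
    "email_inform_owners_directly": True,
    "email_Contact_email_for_all_SPs_with_expired_secrets_status": True,
    "email_Contact_email_get_list_of_orphaned_Service_Principals": False,
}
print(planned_actions(settings))  # ['expired', 'notify_owners']
```

Because the order lives in one list, the order in which the settings are supplied does not matter: if the e-mail quota runs out, it is the later, less critical actions that are affected.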

Step 3 – Storing the data we need for later

The lists created in the previous step will be saved as CSV files in the storage account. These files will be used in a later stage by the Logic App.
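As a rough illustration of this step, the sketch below turns a list of findings into CSV text using Python's standard library. The column names are hypothetical and chosen only for the example; the actual layout is defined by the project's PowerShell script, and uploading the result to the storage account is left out.

```python
import csv
import io

# Hypothetical column names; the real CSV layout is defined by the project's script.
COLUMNS = ["display_name", "app_id", "credential_type", "expires_on", "owner"]


def to_csv(rows):
    """Serialize a list of dicts to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=COLUMNS, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


rows = [
    {
        "display_name": "demo-app",
        "app_id": "00000000-0000-0000-0000-000000000000",
        "credential_type": "secret",
        "expires_on": "2024-06-15",
        "owner": "",
    }
]
print(to_csv(rows))
```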

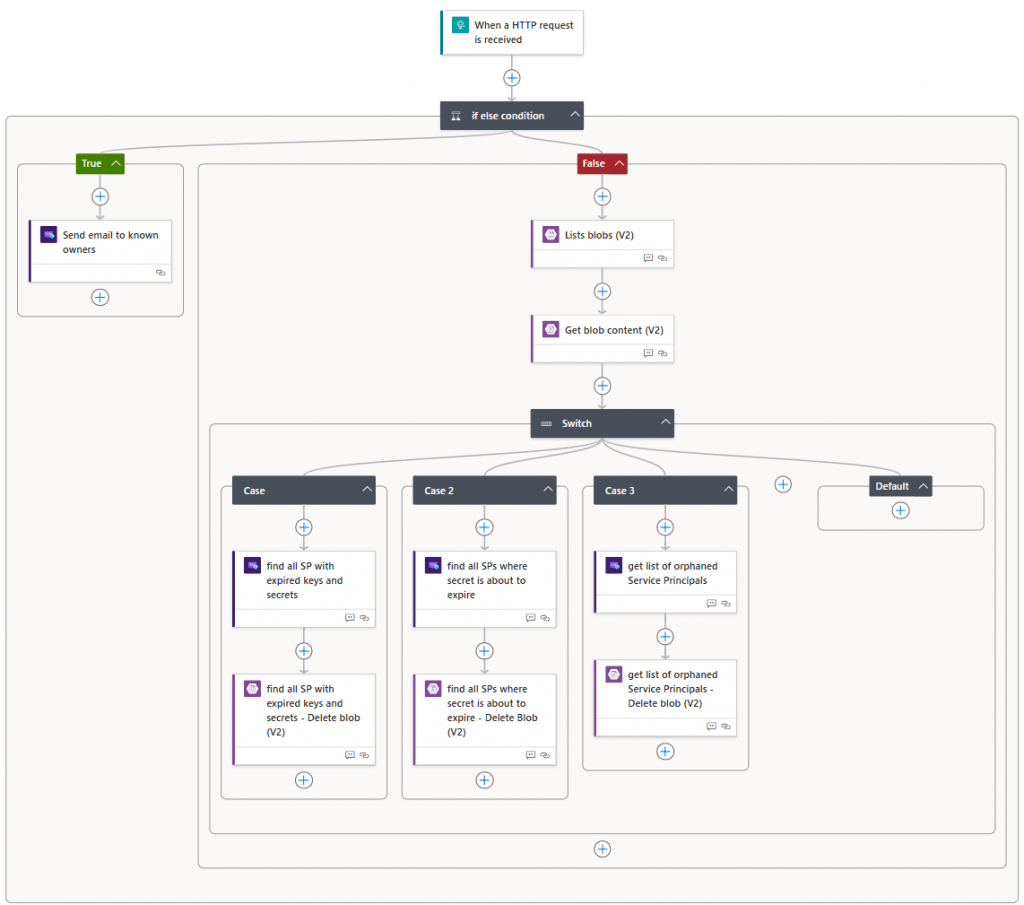

Step 4 – Triggering the Logic App

For each of the above tasks, we are going to trigger the workflow in the Logic App. The Logic App will trigger based on “request type” in the object that is created in the PowerShell script.

Each call is handled differently in the Logic App, depending on its request type. The managed identity of the Logic App requires permissions on the storage account to get the CSV files stored in step 3.
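The sketch below shows what such a request object could look like. It assumes the Logic App receives a JSON body; the field names (`request_type`, `recipient`, `csv_blob_name`) and the `build_request` helper are hypothetical and not taken from the project.

```python
import json


def build_request(request_type, recipient, csv_blob_name=None):
    """Build a JSON body for the Logic App call (all field names are hypothetical)."""
    body = {"request_type": request_type, "recipient": recipient}
    if csv_blob_name is not None:
        # Only list-style requests carry a reference to a CSV file in storage.
        body["csv_blob_name"] = csv_blob_name
    return json.dumps(body)


print(build_request("orphaned", "governance@example.com", "orphaned.csv"))
print(build_request("notify_owner", "owner@example.com"))
```

Keeping a single entry point and branching on one field means that a new notification type only needs a new branch in the workflow, not a new trigger.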

Step 5 – Sending the notification

The Logic App will forward the message to the Azure Communication Services environment with the emails, CSV files (where applicable) and other information gathered with the PowerShell script.

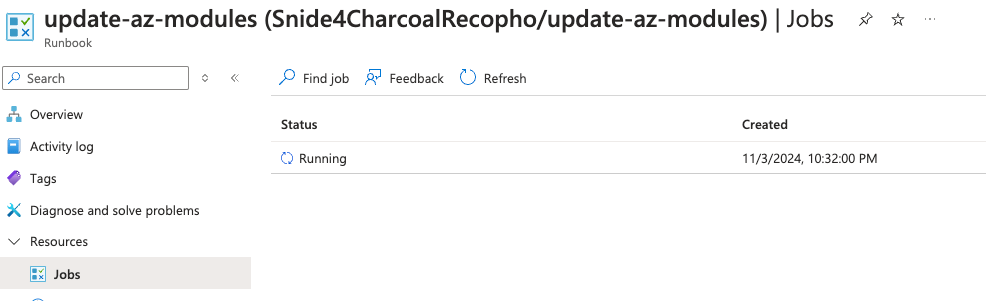

Updating the automation account modules

After creating the resources, a new runbook has been added to the automation account. This is necessary because some AZ modules are not installed in the automation account for PowerShell 7.2 by default. Although these modules are not required for the scripts to run, they cause errors in the log, as they are listed as “required modules” in the AZ base module.

To prevent these errors, the new runbook will run 10 minutes after the initial deployment to update and install the correct modules. It will only take a few minutes to complete.

This process only needs to run once.

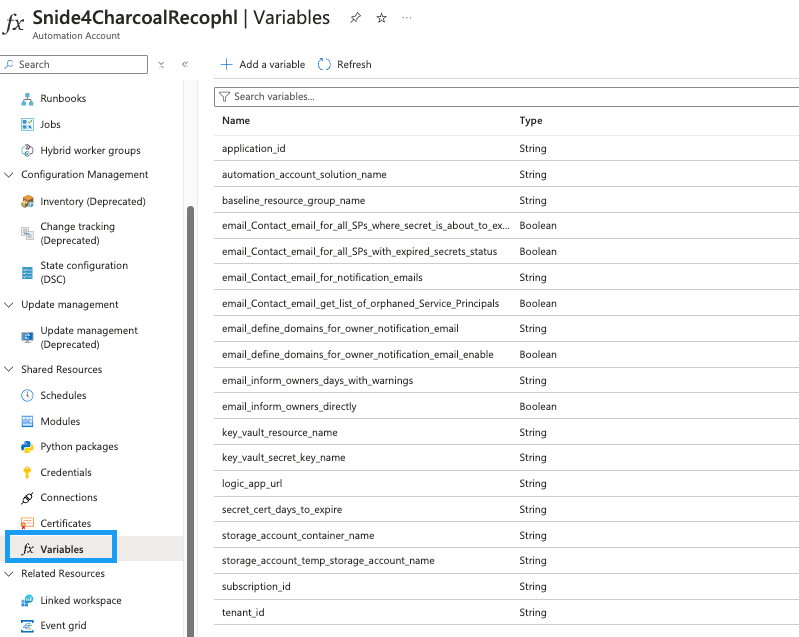

Variables

Let’s take a look at the variables and what they do.

All relevant variables will be stored inside the automation account or key vault.

You can set tags that you need in the locals file or just keep it empty.

Different variable types

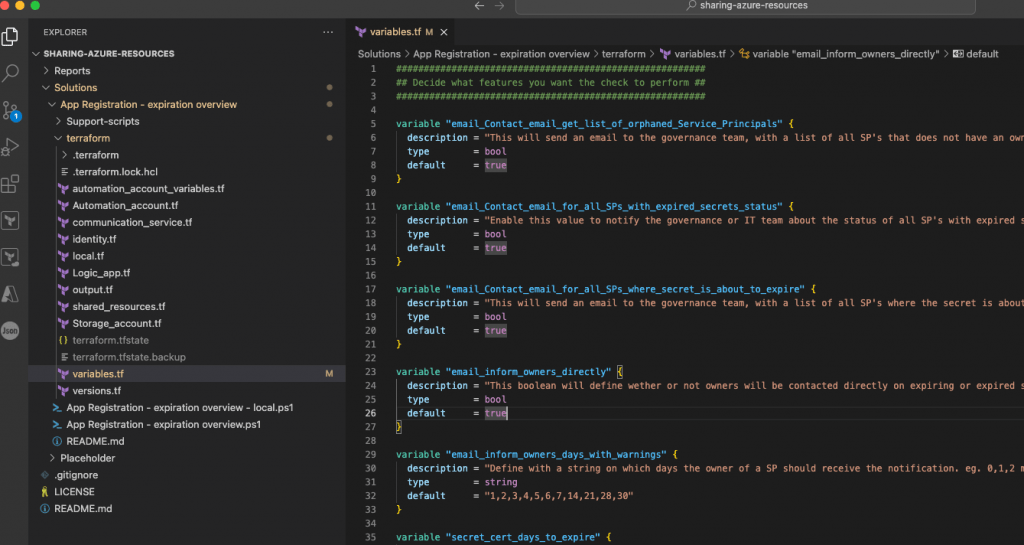

There are multiple variables in the project. Some of them need to be changed, and some of them can be changed if you prefer a specific behavior.

You don’t have to read through this section. All the descriptions can be found on each variable in the variables.tf file or after deployment in the automation account variables section.

Requires customization

email_Contact_email_for_notification_emails

- Description: This is the e-mail address that receives the message about all expiring secrets/certs where an owner could not be found. Note: The list is sent as an attachment in CSV format

- Type: String

subscription_id

- Description: Provide subscription id for deployment

- Type: String

tenant_id

- Description: Provide the tenant ID for the specific tenant. This is used during sign-in

- Type: String

baseline_resource_group_name

- Description: Resource group where all resources are deployed

- Type: String

key_vault_resource_name

- Description: Provide a name for the key vault. The key vault will be used to store secrets that we don’t wish to store in clear text

- Type: String

automation_account_solution_name

- Description: This is the name of the automation account. NOTE: This name has to be unique

- Type: String

Communication_service_naming_convention

- Description: This is a short name that will be used in front of each of the communication services resources. Name is used for resources so you can use it if you have a naming convention etc.

- Type: String

Service_Principal_name

- Description: Service Principal name the application_id value. Used for connecting to Entra ID and collecting secrets and certificates

- Type: String

Configure notification settings

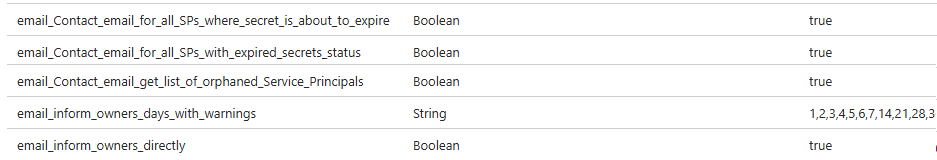

email_Contact_email_get_list_of_orphaned_Service_Principals

- Description: This will send an email to the governance team with a list of all SPs that do not have an owner assigned

- Type: bool

- Default: true

email_Contact_email_for_all_SPs_with_expired_secrets_status

- Description: Enable this value to notify the governance or IT team about the status of all SPs with expired secrets or certificates

- Type: bool

- Default: true

email_Contact_email_for_all_SPs_where_secret_is_about_to_expire

- Description: This will send an email to the governance team with a list of all SPs where the secret is about to expire

- Type: bool

- Default: true

email_inform_owners_directly

- Description: This boolean defines whether or not owners will be contacted directly about expiring or expired secrets and certificates. All owners of the specific SP will be contacted, but owners where the secret or certificate has not yet expired will be contacted first. The owners will be contacted on the days specified in the ‘email_inform_owners_days_with_warnings’ variable

- Type: bool

- Default: true
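The "not yet expired first" rule can be sketched as a simple sort. This is a Python illustration, not the project's PowerShell. The `OwnerNotification` type and its fields are hypothetical, and two details are assumptions of the sketch: a secret counts as expired once its expiry date is before today, and within each group the earlier expiry date goes first.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class OwnerNotification:
    # Hypothetical record; field names are chosen for this example only.
    owner_email: str
    app_name: str
    expires_on: date


def order_for_sending(notifications, today):
    """Not-yet-expired entries first, already expired entries last."""
    # False sorts before True, so unexpired items lead. The tie-break on
    # expiry date is an assumption of this sketch.
    return sorted(notifications, key=lambda n: (n.expires_on < today, n.expires_on))


today = date(2024, 6, 10)
queue = [
    OwnerNotification("a@example.com", "app-a", date(2024, 6, 1)),   # already expired
    OwnerNotification("b@example.com", "app-b", date(2024, 6, 20)),
    OwnerNotification("c@example.com", "app-c", date(2024, 6, 12)),
]
print([n.app_name for n in order_for_sending(queue, today)])  # ['app-c', 'app-b', 'app-a']
```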

email_inform_owners_days_with_warnings

- Description: A comma-separated string defining the days on which the owner of an SP should receive the notification. E.g. 0,1,2 means they will receive the email on the day it expires, 1 day before, and 2 days before.

- Type: string

- Default: 1,2,3,4,5,6,7,14,21,28,30
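To illustrate how such a string could be interpreted, here is a small Python sketch (the project itself does this in PowerShell, and both helper names are hypothetical). It only covers the days before expiry described above; how already expired secrets are handled is not modeled here.

```python
from datetime import date


def parse_warning_days(raw):
    """Turn a string like "1,2,3" into {1, 2, 3}, ignoring blanks and spaces."""
    return {int(part) for part in raw.split(",") if part.strip()}


def should_notify_owner(expires_on, today, warning_days):
    """True when the number of days left until expiry is a configured day."""
    return (expires_on - today).days in warning_days


days = parse_warning_days("1,2,3,4,5,6,7,14,21,28,30")
today = date(2024, 6, 1)
print(should_notify_owner(date(2024, 6, 15), today, days))  # True (14 days left)
print(should_notify_owner(date(2024, 6, 11), today, days))  # False (10 days left)
```

With the default value, an owner hears nothing between day 14 and day 7, and then receives a daily reminder during the final week.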

secret_cert_days_to_expire

- Description: Used in the PowerShell script: the value defines how many days before expiry a secret or certificate will be reported as expiring.

- Type: String

- Default: 30
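A minimal sketch of how this threshold could split credentials into the three groups used in this post follows. It is written in Python for illustration and is not the project's PowerShell. The `classify` helper is hypothetical, it takes the threshold as an integer although the variable is stored as a string, and treating the threshold day itself as "expiring" is an assumption.

```python
from datetime import date


def classify(expires_on, today, days_to_expire=30):
    """Return 'expired', 'expiring' or 'ok' for a single secret or certificate."""
    days_left = (expires_on - today).days
    if days_left < 0:
        return "expired"
    if days_left <= days_to_expire:  # inclusive boundary is an assumption
        return "expiring"
    return "ok"


today = date(2024, 6, 1)
print(classify(date(2024, 5, 25), today))  # expired
print(classify(date(2024, 6, 20), today))  # expiring
print(classify(date(2024, 9, 1), today))   # ok
```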

Configure notification domain settings

Important! If you plan to use your own domain, please note that you must verify the domain before connecting it to the communication service.

Follow these steps:

- Set “Communication_service_naming_domain_type” to your own domain.

- Set “Communication_service_naming_domain_created_dns_records” to false (default).

- Deploy everything with Terraform.

After deployment, verify the domain with DNS. The output will contain the required DNS entries.

Once the domain is verified, update “Communication_service_naming_domain_created_dns_records” to true and run Terraform apply again.

Communication_service_naming_domain_type

- Description: Type in your custom domain (e.g. notify.contoso.com) if you want it to be the domain used for the solution. Leave it as ‘AzureManagedDomain’ to create a Microsoft-managed domain. NOTE: There is a strict quota limit on this type.

- Type: String

- Default: AzureManagedDomain

Read more about the quota limits here

Note: Microsoft won’t raise the limit for an Azure Managed Domain. The limit for a custom domain can be raised to suit your needs.

Communication_service_naming_domain_created_dns_records

- Description: Terraform will only create the last connections if this value is set to true

- Type: bool

- Default: false

Notification e-mail sender restrictions

email_define_domains_for_owner_notification_email

- Description: When looking through owners, it will only send an e-mail if the owner is from one of these approved domains

- Type: string

- Default: “approved_domain_1,approved_domain_2,approved_domain_3”

email_define_domains_for_owner_notification_email_enable

- Description: If true, the script will look at the domains in var.email_define_domains_for_owner_notification_email and only send e-mails to users who have an e-mail address in one of these domains in either the primary e-mail field or the otherMails field in Entra ID

- Type: bool

- Default: false
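The domain restriction can be pictured with the following Python sketch. It is an illustration under assumptions, not the project's PowerShell: the helper names are hypothetical, matching is done case-insensitively on the part after the last "@", and the inputs mirror the `mail` and `otherMails` properties of a Microsoft Graph user.

```python
def approved_domains(raw):
    """Parse the comma-separated domain string into a lowercase set."""
    return {d.strip().lower() for d in raw.split(",") if d.strip()}


def allowed_addresses(mail, other_mails, domains):
    """Return the owner's addresses whose domain is on the approved list."""
    candidates = ([mail] if mail else []) + list(other_mails)
    return [
        address
        for address in candidates
        if "@" in address and address.rsplit("@", 1)[1].lower() in domains
    ]


domains = approved_domains("contoso.com, fabrikam.com")
print(allowed_addresses("anna@external.org", ["anna@Contoso.com"], domains))
# ['anna@Contoso.com']
```

An owner with no address in an approved domain yields an empty list, and in that case no owner e-mail would be sent.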

email_Contact_email_for_notification_emails

- Description: This is the e-mail address that receives the message about all expiring secrets/certs where an owner could not be found. Note: The list is sent as an attachment in CSV format

- Type: string

- Default: e-mail

Location settings

location

- Description: Define the datacenter where the resources should be deployed

- Type: string

- Default: sweden central

data_location_region

- Description: On creation of the communication service, a location is required. This is not a datacenter but a region. Possibilities are Africa, Asia Pacific, Australia, Brazil, Canada, Europe, France, Germany, India, Japan, Korea, Norway, Switzerland, UAE, UK and United States

- Type: string

- Default: Europe

Resource names

key_vault_secret_key_name

- Description: Identity of the secret used for the service principal that has access to see values in Entra ID. This name is also used on the SP to identify the key

- Type: string

- Default: automation-audit-user-secret

logic_app_communication_service_primary_connection_string

- Description: Identity of the secret used for the communication service

- Type: string

- Default: communication-service-primary-connection-string

Deployment

Populate the required variables

Let’s get it deployed. By now, you should have downloaded the files from GitHub. Depending on your preferred editor, it should look something like this. The only file that you need to open is the variables.tf.

Ensure that you read through the description of each variable. You can do that in the previous section or in the variable page itself.

Create resources by running Terraform

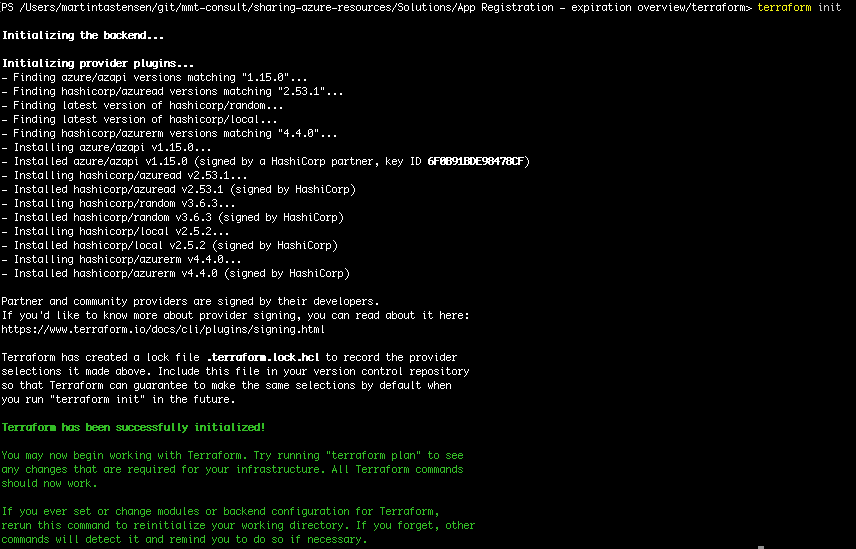

Initiate Terraform

I assume that Terraform is installed and that it has been added to the PATH environment variable (required on Windows, at least). If you have not configured Terraform yet, you can follow my guide to getting started here.

    az login --tenant "6a7d68dc-9a85-46e6-9f4d-1168c4eb6b07" # Where --tenant is your own tenant id

Run Terraform init to download the required Terraform modules, and run Terraform plan afterwards to check whether everything looks correct.

Terraform apply

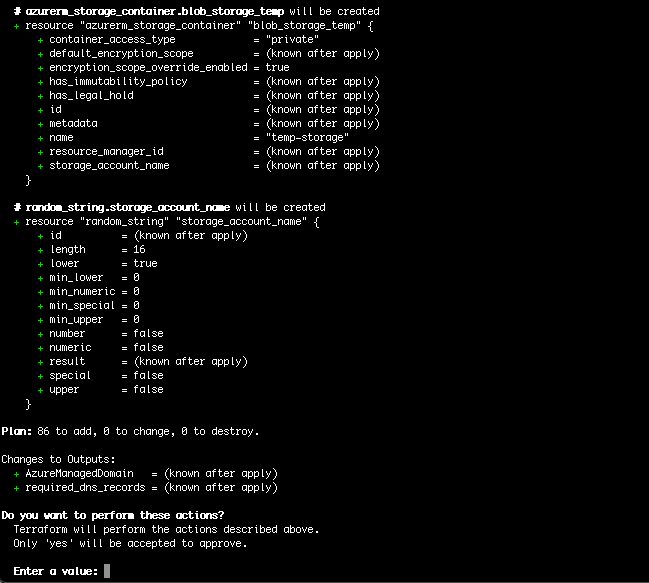

This step will inform you about all resources being created as well as notify you if you cannot create the specific resource.

Common mistakes include using underscores in resource names where these are not supported.

Please note that this process does not check for the uniqueness of names where it is required. This validation will only occur when you run Terraform apply.
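Since naming mistakes only surface late, a quick local check before deploying can save a round trip. The Python sketch below validates a key vault name against the documented Key Vault naming rules: 3 to 24 characters, letters, digits and hyphens only, starting with a letter, ending with a letter or digit, and no consecutive hyphens. The helper is hypothetical and not part of the project, other resource types have different rules, and it cannot check global uniqueness.

```python
import re

# A letter, followed by groups of "optional hyphen + letter or digit".
# This rules out underscores, leading digits, trailing hyphens and "--".
_KEY_VAULT_NAME = re.compile(r"[A-Za-z](?:-?[A-Za-z0-9])*")


def is_valid_key_vault_name(name):
    """Check a candidate value for key_vault_resource_name before deploying."""
    return 3 <= len(name) <= 24 and _KEY_VAULT_NAME.fullmatch(name) is not None


for candidate in ["kv-sp-audit-001", "kv_sp_audit", "kv--audit", "kv"]:
    print(candidate, is_valid_key_vault_name(candidate))
```

Only the first candidate passes: the others contain an underscore, contain consecutive hyphens, or are too short.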

It should create 86 new resources (this might differ on newer versions). Note that many of these resources are modules for the automation account.

Confirm the deployment with a yes.

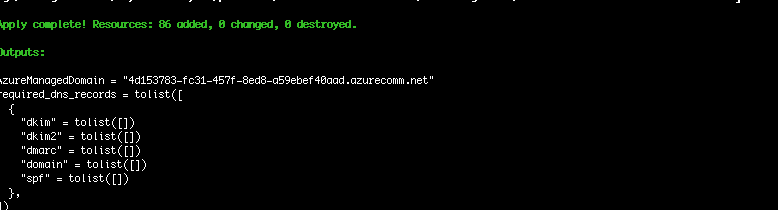

This will run for a few minutes. It should return with a message about everything being created. If not, it is usually because the resource naming does not follow the naming convention or because a resource name was not unique.

Once completed, it should look like this.

The records shown in the output are the ones that need to be created for a custom domain to be approved, but since this deployment is with an Azure-managed domain, we do not need it here.

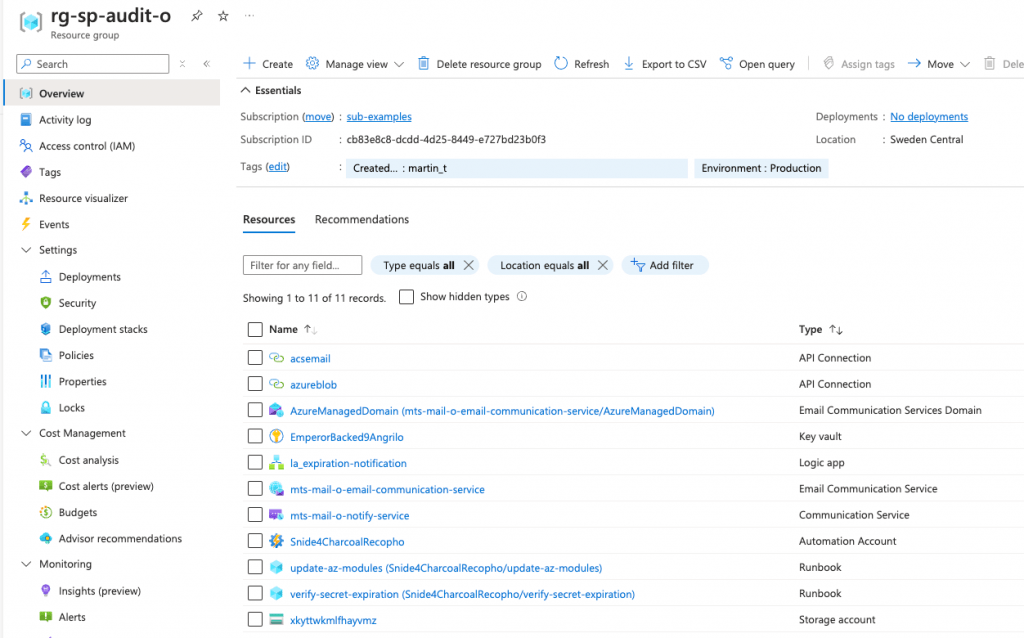

Next step is to check that the resources are created.

And it looks great.

If you do not plan to maintain the resources using Terraform, you can safely delete the Terraform files now. I recommend, however, that you maintain it using Terraform to ensure an easy upgrade to new versions when released.

Provide permissions to service principal

The next step is to grant API permissions to the Entra ID service principal. Usually, this step is handled by a specific team, and therefore, I have not automated it. If you have the necessary permissions, you can manually grant the required permissions to the service principal in Entra ID.

Update modules on automation account

Before you run the job the first time, please wait for the AZ update runbook to run once. It will start shortly after the deployment is complete.

You can run it again if you need to, but since the versions are hard-coded, it should not be necessary.

Change which part is running

To change which part of the scripts is running, you can change the variables in variables.tf and run Terraform apply again or open the automation account –> shared resources –> variables block and change the value manually.

The script is looking at this location for the information, and the variables.tf basically just updates it here.

When running it for the first time, I recommend that you disable “email_inform_owners_directly” and verify that the notification e-mails are sent and look correct before sending e-mails to the owners.

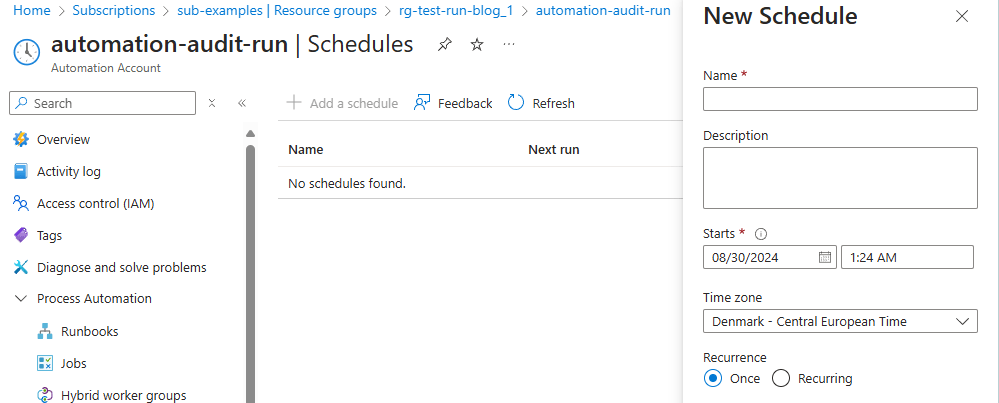

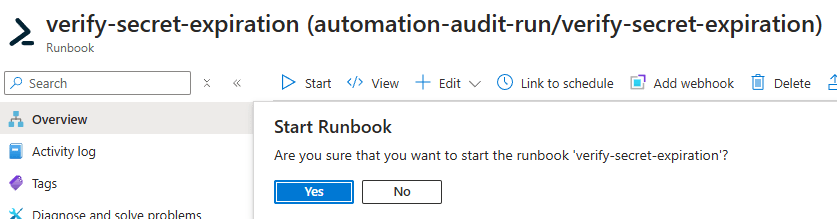

Run manually or set up schedule

I have not used Terraform on this part, although I have considered handling the schedule with Terraform.

For now, open the automation account and either run it or create a schedule in the automation account –> shared resources –> schedules and attach it to the runbook.

Alternatively, you can run the runbook manually with the start command from either PowerShell or the Azure portal.

The result

Based on the enabled features, the script will now run through all service principals and send notifications. Let’s run through what each setting does. Each of the templates below can be edited in the Logic App.

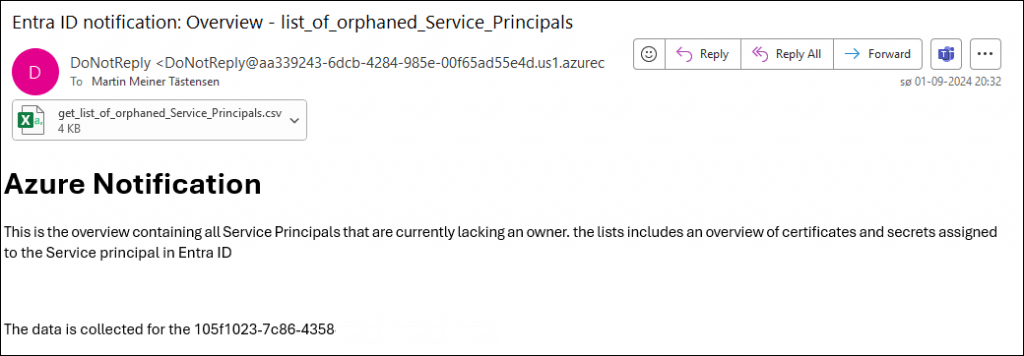

email_Contact_email_get_list_of_orphaned_Service_Principals

The defined contact will receive an email like the following.

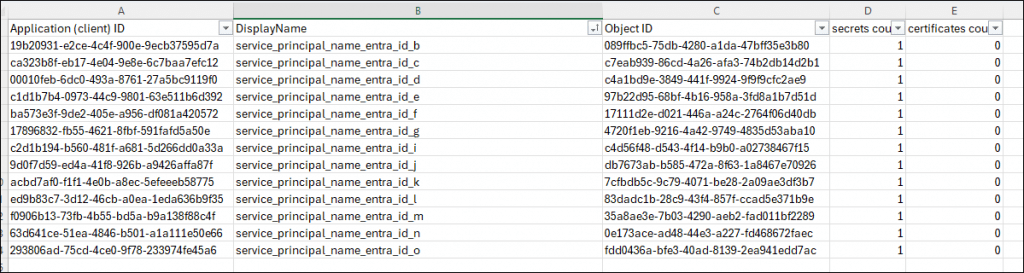

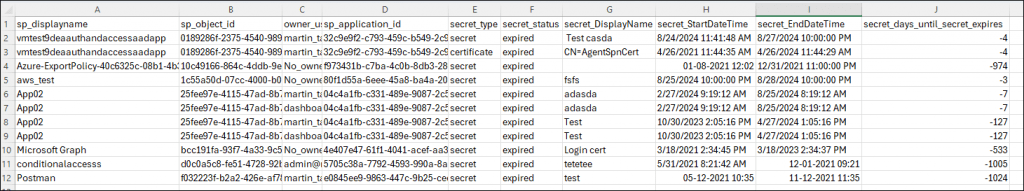

The attachment will show a simple five column overview. In this case, you should get an idea of how many times I have deployed this solution in my test tenant.

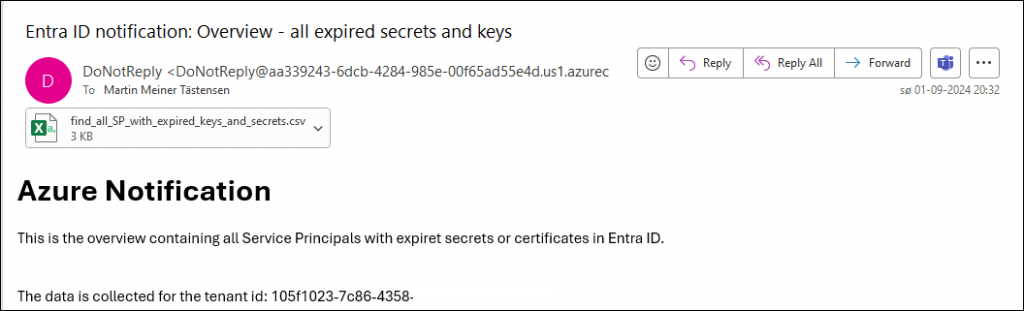

email_Contact_email_for_all_SPs_with_expired_secrets_status

Same template as above, but with a new text and subject.

The attachment contains both the orphaned service principals as well as the ones with a defined owner.

email_Contact_email_for_all_SPs_where_secret_is_about_to_expire

The last list includes all secrets and certificates that are about to expire but have not yet expired. It includes service principals both with and without owners.

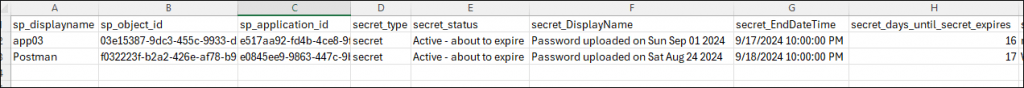

email_inform_owners_directly

An email with the following template will be sent to each owner for each service principal covering every secret or certificate that is about to expire or has already expired.

In this example, it expired seven days ago.

Future improvements

I have a lot of ideas for improvements for the future. Among these are:

- Schedule during deployment.

- Adding some missing details to the notification e-mails for the governance team.

- Refreshing the owner notification email, since this is currently fairly basic – close to crude.

- Overwriting the owner emails one time to test the delivery and design of the email.

- Adding a history for each service principal, registering who was owner at all times, and providing this information for the orphaned emails and as a workbook to search in.

If you have other suggestions, or if you experience issues, please feel free to reach out to me here or by creating an issue on GitHub.
